AWARD YEAR

2023

CATEGORY

Body

GOALS

Good Health & Well-being, Reduced Inequality

KEYWORDS

artificial intelligence, Visually Impaired, navigation

COUNTRY

United States of America

DESIGNED BY

Sama & Google

WEBSITE

https://www.sama.com/blog/google-project-guideline/

Project Guideline

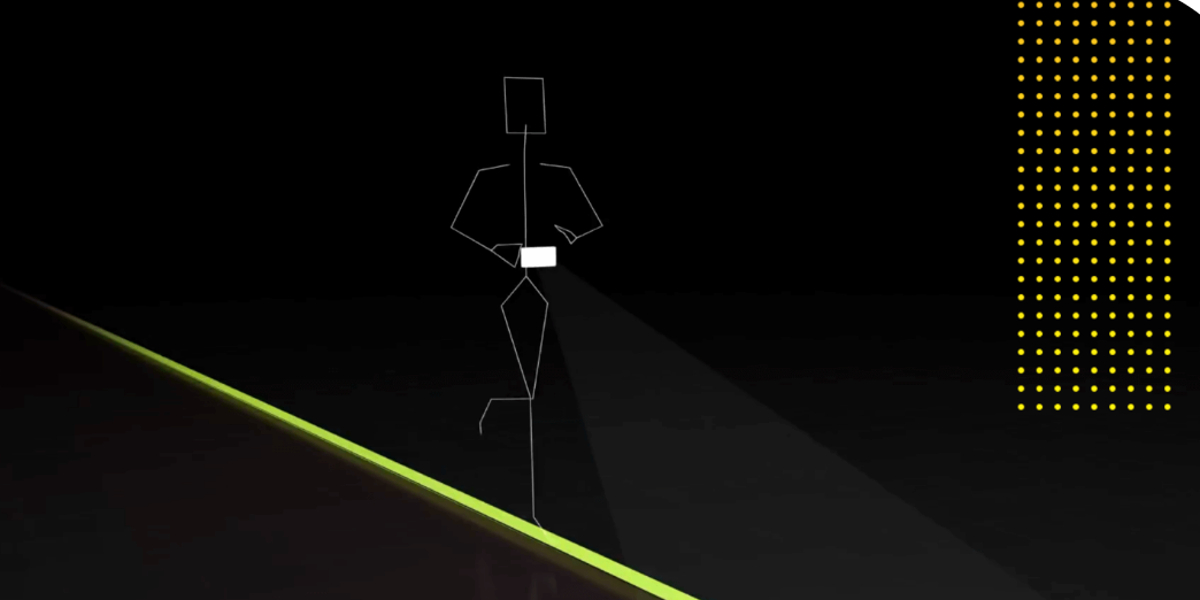

A device that helps people with reduced vision walk and run for exercise independently.

How does it work?

The machine learning algorithm developed by Google requires only a line painted on a pedestrian path. Runners wear a regular Android phone in a harness around the waist, and the phone’s camera feeds imagery to the algorithm, which identifies the painted line. The algorithm detects whether the line is to the runner’s left, to their right, or centered, and audio signals then guide the runner to stay on track.
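The left, right, or center decision described above can be illustrated with a minimal sketch. It is not Google's implementation: it assumes the model has already produced a per-pixel mask of the painted line, and the function name `line_position` and the `dead_zone` tolerance are hypothetical choices made for illustration.

```python
from typing import List, Optional


def line_position(mask: List[List[int]], dead_zone: float = 0.15) -> Optional[str]:
    """Classify where the detected line sits relative to the image center.

    mask: rows of 0/1 values, where 1 marks a pixel labelled as the painted line.
    dead_zone: hypothetical tolerance, as a fraction of the image width,
               within which the line counts as centered.
    """
    width = len(mask[0])
    # Collect the column index of every pixel labelled as line.
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not xs:
        return None  # no line visible in this frame

    centroid = sum(xs) / len(xs)          # mean column of the line pixels
    center = (width - 1) / 2              # middle column of the image
    offset = (centroid - center) / width  # signed offset, as a fraction of width

    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "center"


# Example frame: the line occupies column 1 of a 10-pixel-wide image.
frame = [[1 if x == 1 else 0 for x in range(10)] for _ in range(4)]
print(line_position(frame))
```

In a real system the returned label would be mapped to an audio cue; how that cue is produced is outside the scope of this sketch.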

Sama’s expert annotators draw precise polygons around the solid yellow lines and a single center line in the images. To do this, the Sama team underwent an intensive training process and continues to meet with Google’s engineers each week to check in on quality, discuss edge cases, and receive new instructions. With enough examples to learn from, the algorithm is trained to distinguish the pixels in the yellow line from everything else.
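To show how a polygon annotation can become per-pixel training labels, here is a small sketch under stated assumptions. The annotation format (a list of `(x, y)` vertices) and the helper names `point_in_polygon` and `polygon_to_mask` are hypothetical; the actual tooling used by Sama and Google is not described in the source. The sketch uses the standard ray-casting test to decide whether each pixel center falls inside the polygon.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: List[Point]) -> bool:
    """Ray-casting test: count edge crossings of a ray going right from (x, y)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon: List[Point], width: int, height: int) -> List[List[int]]:
    """Label each pixel 1 if its center lies inside the polygon, else 0."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Hypothetical annotation: a vertical strip covering columns 1 and 2.
annotation = [(1, 0), (3, 0), (3, 4), (1, 4)]
for row in polygon_to_mask(annotation, width=5, height=4):
    print(row)
```

Masks like this, paired with the original images, are the kind of "line versus everything else" examples a segmentation model could learn from.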

Why is it needed?

People who are visually impaired have no tools for running or walking independently and must rely on guide dogs or volunteer human guides to exercise with them.

How does it improve life?

The design is life-changing for visually impaired people who have never had the opportunity to run independently or to use a navigation experience designed for the blind.