AWARD YEAR

2023

CATEGORY

Play & Learning

GOALS

Balance Human & Artificial Intelligence

KEYWORDS

DIVERSITY

COUNTRY

United States of America

DESIGNED BY

Google

WEBSITE

https://store.google.com/intl/en/ideas/real-tone/

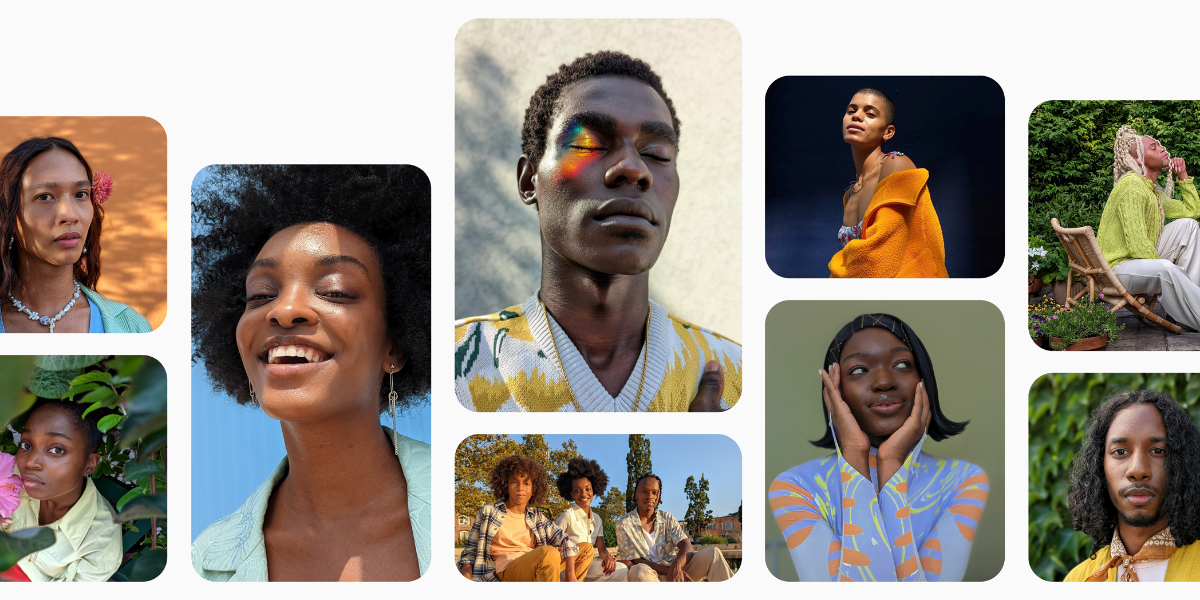

Real Tone

Representing the nuances of different skin tones for all people, beautifully and authentically

How does it work?

To make a great portrait, your phone first has to detect a face in the picture, so we trained our face detector models to “see” more diverse faces in more lighting conditions. Auto-white balance models help determine color in a picture, and our partners helped us hone these models to better reflect a variety of skin tones. Similarly, we changed our auto-exposure tuning to balance brightness so that your skin looks the way it does in real life, not unnaturally brighter or darker.
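To make the auto-exposure idea concrete, here is a minimal sketch of face-weighted metering. It is not Google's implementation: the function name `exposure_gain`, the `target` brightness, the `face_weight`, and the `max_gain` clamp are all assumptions chosen for illustration. The idea is simply that brightness is measured mostly on the detected face rather than on the whole frame, so a face in front of a bright background is not left underexposed.

```python
def exposure_gain(luma, face_mask, target=0.45, face_weight=0.7, max_gain=4.0):
    """Return a multiplicative brightness gain for a frame.

    luma       -- list of pixel luminance values in [0, 1]
    face_mask  -- list of 0/1 flags, 1 where a (hypothetical) face detector fired
    target     -- assumed desired metered brightness
    face_weight -- assumed share of the metering given to face pixels
    max_gain   -- assumed clamp so the correction never exceeds 4x up or down
    """
    face = [v for v, m in zip(luma, face_mask) if m]
    rest = [v for v, m in zip(luma, face_mask) if not m]

    def mean(values):
        return sum(values) / len(values) if values else None

    face_mean, rest_mean = mean(face), mean(rest)

    # Fall back to whichever region exists when the other one is empty.
    if face_mean is None:
        metered = rest_mean
    elif rest_mean is None:
        metered = face_mean
    else:
        metered = face_weight * face_mean + (1 - face_weight) * rest_mean

    # Nothing to meter on, or a fully black frame: leave exposure unchanged.
    if metered is None or metered <= 0:
        return 1.0

    gain = target / metered
    return max(1 / max_gain, min(max_gain, gain))


# A dark face (0.1) in front of a bright background (0.8).
frame = [0.1, 0.1, 0.8, 0.8]
with_face = exposure_gain(frame, [1, 1, 0, 0])     # meters mostly on the face
without_face = exposure_gain(frame, [0, 0, 0, 0])  # meters on the whole frame
```

In this toy frame, whole-frame metering sees an average that already matches the target and leaves the dark face as it is, while face-weighted metering asks for a brighter exposure. A real camera pipeline would also have to avoid over-brightening, which is the other half of the "not unnaturally brighter or darker" goal.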

Why is it needed?

Skin tone bias in artificial intelligence and cameras is well documented, and the effects of this bias are real: too often, folks with darker complexions are rendered unnaturally, appearing darker, brighter, or more washed out than they do in real life. In other words, they’re not being photographed authentically. For example, stray light in an image can make darker skin tones look washed out, so we developed an algorithm to reduce its negative effects in your pictures.

How does it improve life?

To address skin tone bias, Google has been working on Real Tone. The goal is to bring accuracy to cameras and the images they produce. Real Tone is not a single technology but a broad approach and commitment, carried out in partnership with image experts who have spent their careers beautifully and accurately representing communities of color. The result is a set of computational photography algorithms tuned to better reflect the nuances of diverse skin tones.

It’s an ongoing process. Lately, we’ve been making improvements to better identify faces in low-light conditions. And with Pixel 7, we’ll use a new color scale that better reflects the full range of skin tones.